Here is my good news / bad news report about AI agents. In summary, working with AI agents is a lot like working with humans!

We collectively:

– Succeed often

– Fail often

– Understand so much and yet so little of what is being discussed

– Rarely admit misunderstanding until asked direct questions

– Randomly make the same mistake

– Randomly make brand new mistakes that make you question every previous success

Thankfully, our existing strategies are effective:

– Be grateful

– Be alert and resilient

– Trust but verify

– Commit to continuous improvement

My first few steps with Cursor AI were delightful, and I had a prototype of my sound generator tool created in 30 minutes. I have a sketch of this concept in my college sketchbook – it had been waiting decades to come to life, and it took less than an hour.

Cursor, Claude and I had selected programming languages and toolkits, installed the components for running local web pages, and created a simple user interface.

I was ready to share this first iteration, but decided to work on a one-click publishing process so I could easily push updates and new projects to my public WordPress site. (Scope Creep!) That task was far more challenging – we ran into enough issues that we added logging and a full-fledged test framework with unit and integration tests. At this point, I was still feeling shocked and pleased at our progress.

But while debugging these publishing issues, Cursor began making additional code changes that I hadn’t asked for. We created test scripts, and when prompted to run them, Cursor would instead generate new test scripts, in JavaScript rather than PowerShell.

I crafted better prompts, and we wrote documentation for creating builds, killing processes, restarting servers and running test procedures. This worked well for a while, but occasionally and unexpectedly, rather than follow my instructions, Cursor would search the code to find the functions and then call them incorrectly.

Still convinced that I was almost ready to churn out cool web applications daily, I hammered on my developer tooling long enough to run out of “fast requests”. There the rubber met the road: my Excel AI costing modeler came to life, and my marker met the whiteboard. The whiteboard now reads: “I want consistent, reliable results from AI tools”.

Consistent results will require a mechanism to test and measure results – a wrapper around a given tool and my own benchmarks. Knowing that prompting nuances change from one model to the next and from one version to the next, custom benchmarking can build trust in new models and help us optimize quality, speed and cost.
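A minimal sketch of what such a benchmark wrapper might look like, not the wrapper I actually built. It assumes the tool can be reduced to a function that takes a prompt and returns text; the names `Case`, `Report`, `run_benchmark` and `fake_model` are all hypothetical, and `fake_model` stands in for a real API call.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    """One benchmark case: a prompt plus a check on the reply (hypothetical)."""
    prompt: str
    check: Callable[[str], bool]  # returns True when the reply is acceptable


@dataclass
class Report:
    """Summary of one benchmark run."""
    passed: int
    total: int
    seconds: float

    @property
    def pass_rate(self) -> float:
        return self.passed / self.total if self.total else 0.0


def run_benchmark(model: Callable[[str], str], cases: List[Case]) -> Report:
    """Run every case through the model and count how many checks pass."""
    start = time.perf_counter()
    passed = 0
    for case in cases:
        try:
            if case.check(model(case.prompt)):
                passed += 1
        except Exception:
            # A crash in the model or the check counts as a failed case.
            pass
    return Report(passed, len(cases), time.perf_counter() - start)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption): upper-cases the prompt."""
    return prompt.upper()


CASES = [
    Case("hello", lambda reply: reply == "HELLO"),  # the fake model passes this
    Case("abc", lambda reply: reply == "xyz"),      # and fails this one
]

if __name__ == "__main__":
    report = run_benchmark(fake_model, CASES)
    print(f"{report.passed}/{report.total} passed in {report.seconds:.4f}s")
```

Swapping `fake_model` for a function that calls a different model or version, while keeping `CASES` fixed, is what would make the pass rate and timing comparable from one run to the next.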

I know that consistent prompting and contexts will produce more consistent results – which means that I shouldn’t be typing prompts anymore. I’ll need a prompt generator, an injector, and a monitor. So, while I wait for Cursor and Claude to slow-roll my code requests, I’m working with GPT-4 and o1 to start building my first AI Wrapper. We just logged our first API calls. At this point, my wrapper is useless, but I’m on a path. Next up – bootstrapping the AI wrapper with basic requirements so we can start auto-iterating, and a dashboard so we can watch progress.
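To make the generator / injector / monitor idea concrete, here is a rough sketch under my own assumptions. None of these names come from a real library: `generate_prompt`, `inject`, `Monitor` and `echo_model` are hypothetical, and `echo_model` stands in for a real API call.

```python
from string import Template
from typing import Callable, Dict, List

# Generator: every prompt comes from one fixed template instead of being typed.
PROMPT_TEMPLATE = Template("Task: $task\nRules: $rules\nContext:\n$context")


def generate_prompt(task: str, rules: str, context: str) -> str:
    """Build a prompt from the template so identical inputs give identical text."""
    return PROMPT_TEMPLATE.substitute(task=task, rules=rules, context=context)


class Monitor:
    """Monitor: keeps an in-memory record of every prompt and reply."""

    def __init__(self) -> None:
        self.records: List[Dict[str, str]] = []

    def record(self, prompt: str, reply: str) -> None:
        self.records.append({"prompt": prompt, "reply": reply})


def inject(send: Callable[[str], str], prompt: str, monitor: Monitor) -> str:
    """Injector: send the prompt through `send` and log the exchange."""
    reply = send(prompt)
    monitor.record(prompt, reply)
    return reply


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption): reports the prompt length."""
    return f"received {len(prompt)} characters"


if __name__ == "__main__":
    monitor = Monitor()
    prompt = generate_prompt(
        task="Run the existing test scripts",
        rules="Do not create new scripts",
        context="tests live in ./tests",
    )
    print(inject(echo_model, prompt, monitor))
    print(len(monitor.records), "exchange(s) logged")
```

The point of the split is that the template is the only place wording changes, so any difference in results can be traced to a template edit or a model change rather than to how I happened to phrase things that day.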

Update from the future. With my AI Wrapper standing, sending and receiving, I am ready for more. But wait – what’s LangChain? What’s LangGraph? What about Theia?

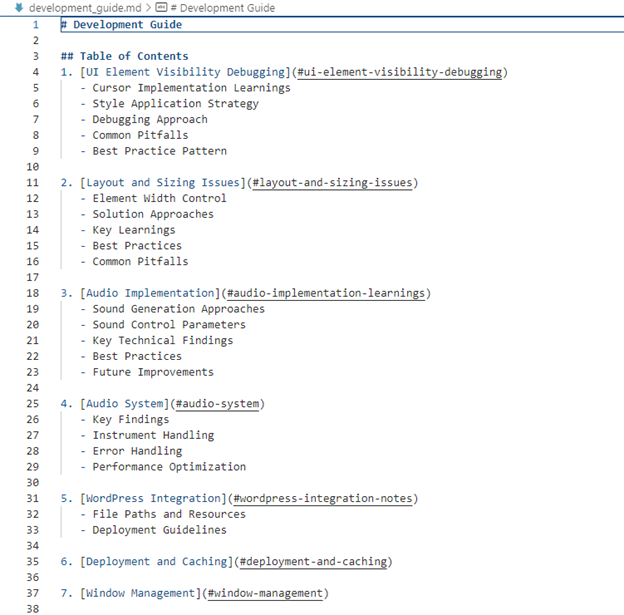

Bottom line? Software practices are more important than the code. Faster code just means faster technical debt, faster BugGen, and faster laps around the same circle. It can be fun, but you don’t get anywhere. I had to take a break and read up on Git, branching strategies, CI/CD, and TDD. Along the way I found a great guide which could have saved me some time.

What did I build? A sound maker, just for fun. The basics were working in a few hours, but Cursor and I went around in circles breaking it over and over again while trying to add new features. I sadly had to defer the MIDI selector feature, but I know that I need to start from the ground up if I want reliable progress. It’s just too easy to ask for more features – the AI will gladly jam them in. It won’t follow the same pattern as last time, and I’ll be too lazy to read closely what it changed.

I need to give some thanks to the presenters in December who motivated me to make something, and get it all the way to a published state. Check out this excellent series: thinking-with-sand-a-virtual-talk-series-exploring-new-software-interfaces-and-tools-for-augmented-thinking-and-creative-exploration.

My sound-maker project forced me to tackle a good list of “firsts”: Creative Commons License, Git Branching and Backups, Cursor AI, Webpack, P5, Audio Oscillator, Sound Visualization, MIDI Audio (didn’t make the released version, but someday). It also forced me to bone up on some critical development patterns which I’ve left to the Engineers in the past. And finally, it gave me time on the side, while waiting for “slow responses” to also prototype my first: AI Wrapper, React UI, and AI Chatbot.

But even with all of that excitement, next on my list is a simple, robust machine. It has been on my mind for several years, and I will share more later. I am excited to say that now is the time!
